Now that the project has officially ended, it is a good opportunity to reflect on our key research advancements, as evidenced by our publications.

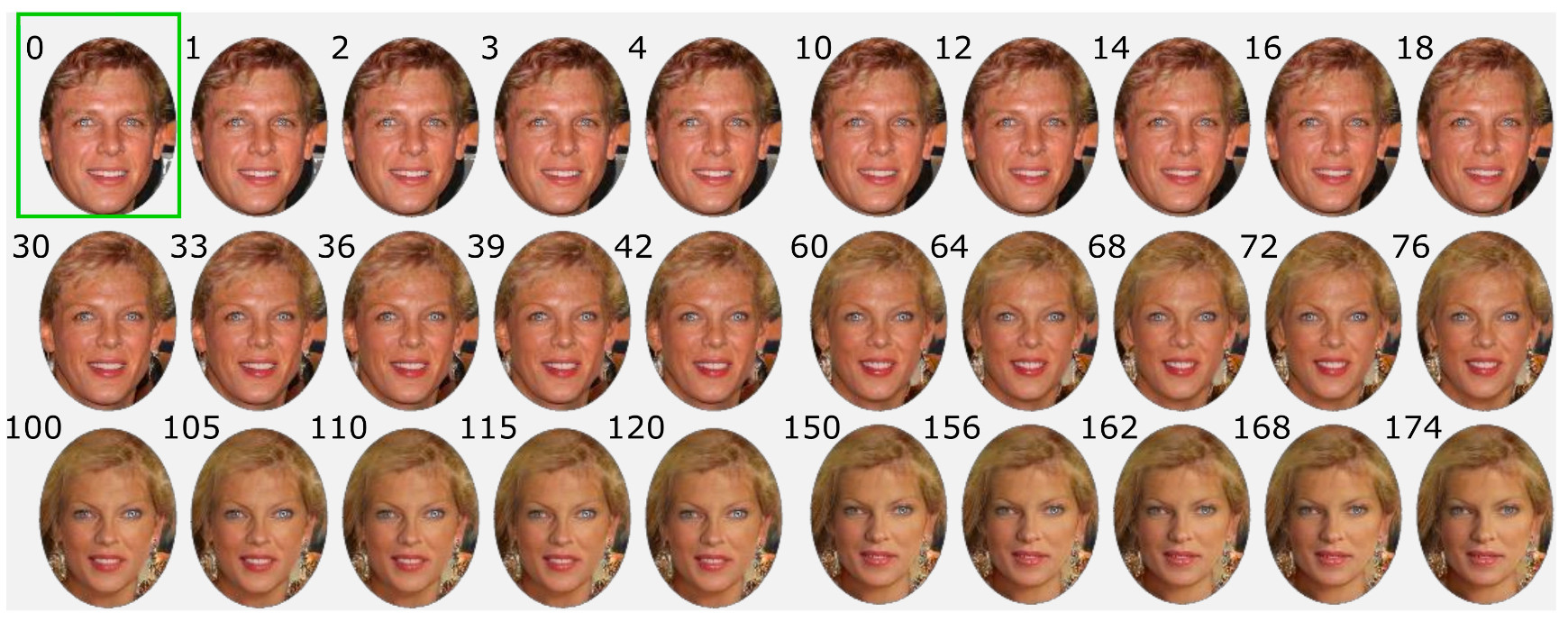

Temporal consistency estimates based on neural networks for gaze and visual data

This is one of our key achievements, where a baseline convolutional neural network proved effective in learning to predict the attention score as defined in previous work. We have published our results in Brainsourcing for Temporal Visual Attention Estimation, in the prestigious Biomedical Engineering Letters journal [1].

Late and early fusion of multimodal representations of imagery data

This achievement was reported in the research paper The Elements of Visual Art Recommendation: Learning Latent Semantic Representations of Paintings [2], presented at the ACM Conference on Human Factors in Computing Systems (CHI), the top conference in Human-Computer Interaction. The follow-up paper, Together Yet Apart: Multimodal Representation Learning for Personalised Visual Art Recommendation [3], was presented at the ACM Conference on User Modeling, Adaptation and Personalization (UMAP), the oldest international conference for researchers and practitioners working on user-adaptive computer systems.

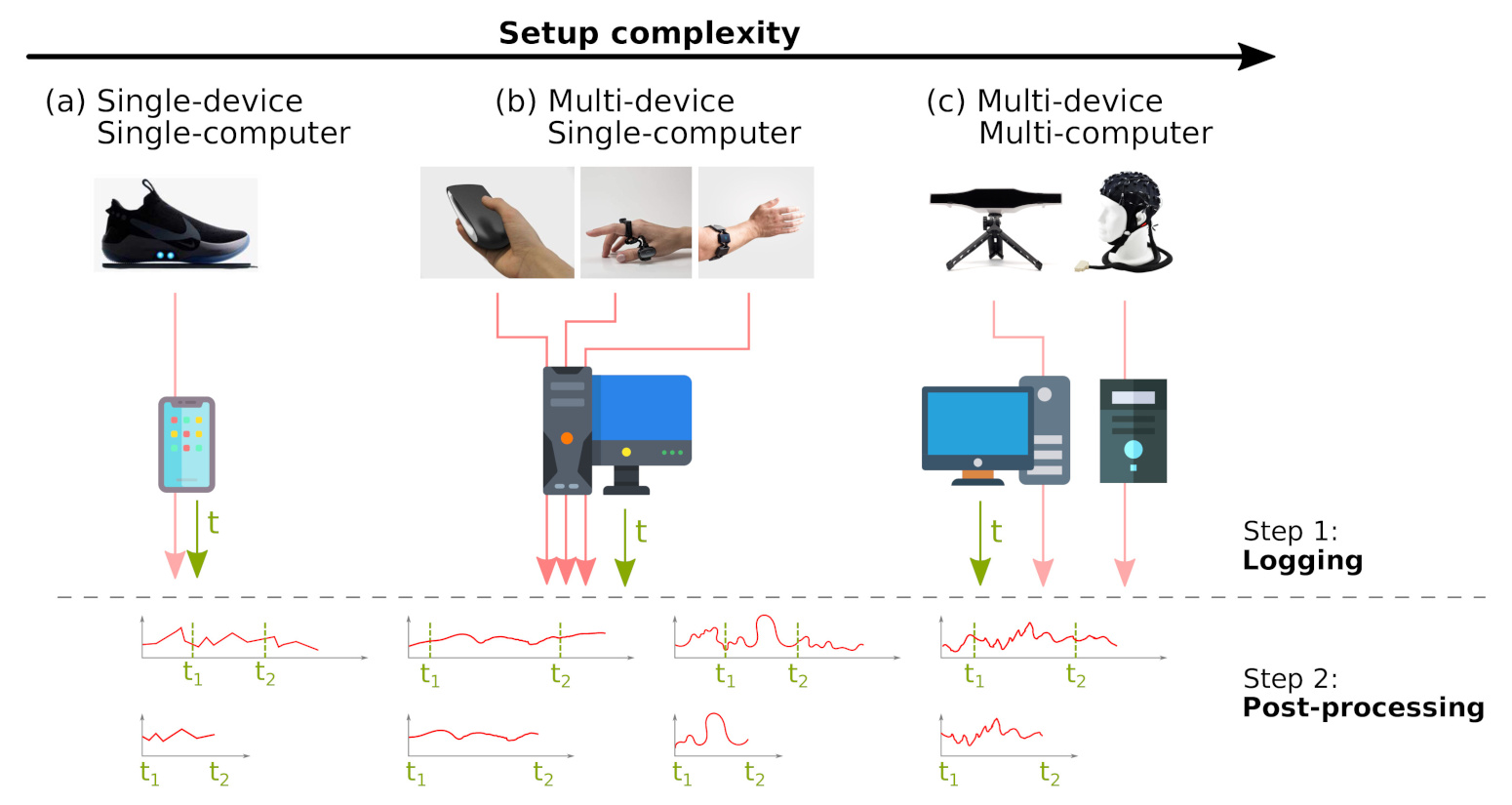

Multi-device multi-computer offline signal synchronization software

This achievement was reported in the research paper Gustav: Cross-device Cross-computer Synchronization of Sensory Signals [4], presented at the ACM Symposium on User Interface Software and Technology (UIST), the premier forum for software innovations in Human-Computer Interaction. We have recently published an improved system, called Thalamus [5], at the ACM UMAP’25 conference; it will be presented in June in New York, USA.

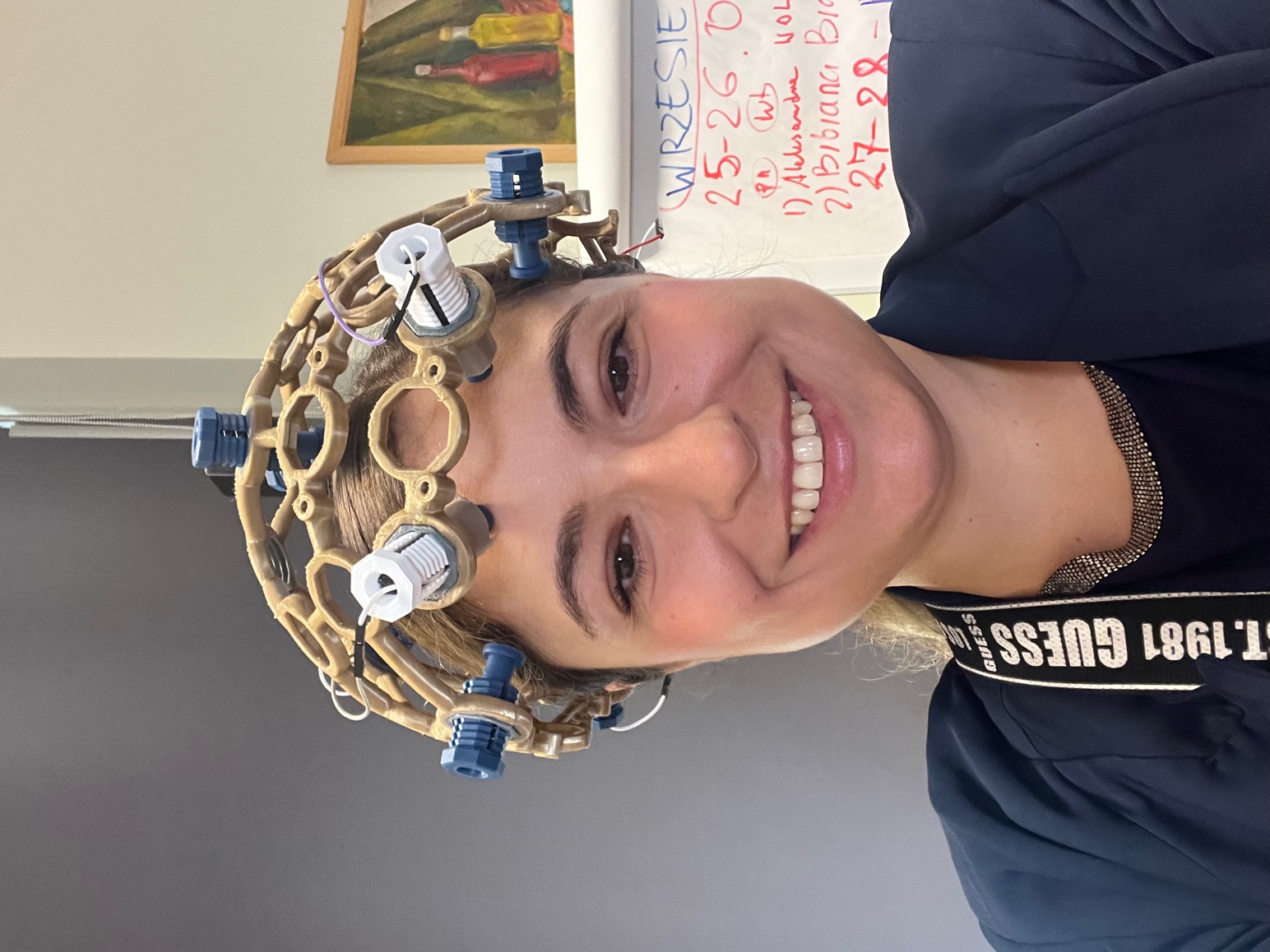

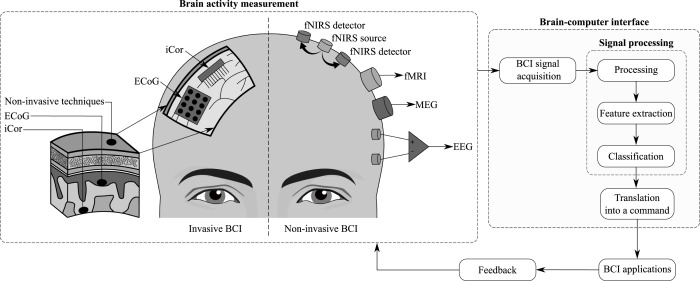

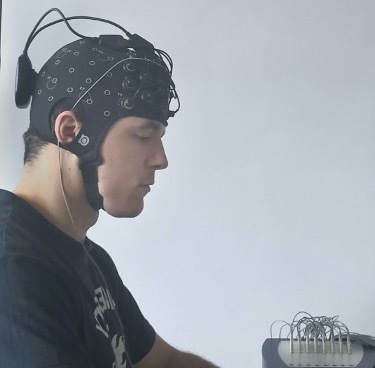

Crowdsourcing via brain-computer interfacing

This has been addressed in two different settings: on the one hand, with fNIRS signals elicited by static (image) stimuli; on the other hand, with EEG signals elicited by dynamic (video) stimuli. The effectiveness of crowdsourcing mechanisms has been demonstrated. The first work, on images and fNIRS, has already been published in the top journal in Affective Computing: Crowdsourcing Affective Annotations via fNIRS-BCI [6].

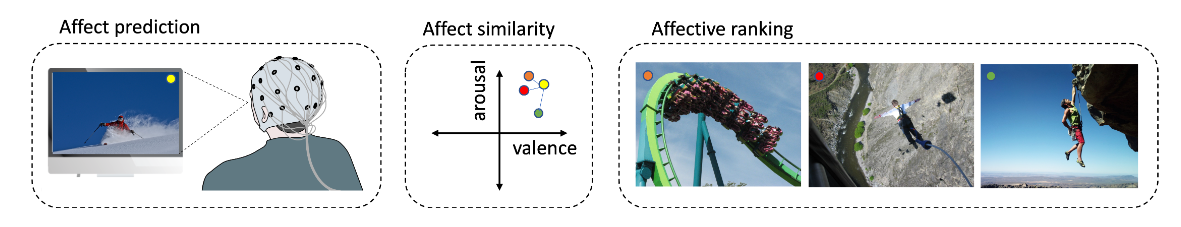

Information retrieval based on emotions captured via brain-computer interfacing

This achievement was reported in Feeling Positive? Predicting Emotional Image Similarity from Brain Signals [7], presented at ACM Multimedia, one of the best conferences in Computer Science (ranked as CORE A*, top 7% of all CS conferences).

Generative model control and conditioning via brain input

Generative models controlled by brain input are now a reality, as we reported in Cross-Subject EEG Feedback for Implicit Image Generation [8], Perceptual Visual Similarity from EEG: Prediction and Image Generation [9] and Brain-Computer Interface for Generating Personally Attractive Images [10].

Semantic similarity estimation via brain-computer interfacing

This achievement was reported in Affective Relevance: Inferring Emotional Responses via fNIRS Neuroimaging [11], presented at the International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR), the premier forum for researchers and practitioners interested in data mining and information retrieval research. In addition, the basic research underlying this achievement was reported in The P3 indexes the distance between perceived and target image [12], published in the journal Psychophysiology.

Consistency estimates of brain signals

Consistency is closely related to the crowdsourcing concept (see above), but it also has additional facets, such as temporal consistency, which we have also worked on. This is an incredibly challenging task due to the limited data available and the great inter- and intra-subject variability of brain signals. We have published our results in Affective annotation of videos from EEG-based crowdsourcing [13], in the Pattern Analysis and Applications journal (in press).

References

1. Y. Moreno-Alcayde, T. Ruotsalo, L. A. Leiva, V. J. Traver. Brainsourcing for temporal visual attention estimation. Biomed. Eng. Lett. 15, 2025. https://doi.org/10.1007/s13534-024-00449-1

2. B. A. Yilma, L. A. Leiva. The Elements of Visual Art Recommendation: Learning Latent Semantic Representations of Paintings. Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems (CHI), 2023. https://doi.org/10.1145/3544548.3581477

3. B. A. Yilma, L. A. Leiva. Together Yet Apart: Multimodal Representation Learning for Personalised Visual Art Recommendation. Proceedings of the 31st ACM Conference on User Modeling, Adaptation and Personalization (UMAP), 2023. https://doi.org/10.1145/3565472.3592964

4. K. Latifzadeh, L. A. Leiva. Gustav: Cross-device Cross-computer Synchronization of Sensory Signals. Adjunct Proceedings of the 35th Annual ACM Symposium on User Interface Software and Technology (UIST Adjunct), 2022. https://doi.org/10.1145/3526114.3558723

5. K. Latifzadeh, L. A. Leiva. Thalamus: A User Simulation Toolkit for Prototyping Multimodal Sensing Studies. Adjunct Proceedings of the 33rd ACM Conference on User Modeling, Adaptation and Personalization (UMAP), 2025. In Press.

6. T. Ruotsalo, K. Mäkelä, M. Spapé. Crowdsourcing Affective Annotations via fNIRS-BCI. IEEE Trans. Affect. Comput. 15(1), 2024. https://doi.org/10.1109/TAFFC.2023.3273916

7. T. Ruotsalo, K. Mäkelä, M. M. Spapé, L. A. Leiva. Feeling Positive? Predicting Emotional Image Similarity from Brain Signals. Proceedings of the 31st ACM International Conference on Multimedia (MM), 2023. https://doi.org/10.1145/3581783.3613442

8. C. de la Torre-Ortiz, M. M. Spapé, N. Ravaja, T. Ruotsalo. Cross-Subject EEG Feedback for Implicit Image Generation. IEEE Trans. Cybern. 54(10), 2024. https://doi.org/10.1109/TCYB.2024.3406159

9. C. de la Torre-Ortiz, T. Ruotsalo. Perceptual Visual Similarity from EEG: Prediction and Image Generation. Proceedings of the 31st ACM International Conference on Multimedia (MM), 2024. https://doi.org/10.1145/3664647.3685508

10. M. Spapé, K. M. Davis, L. Kangassalo, N. Ravaja, Z. Sovijärvi-Spapé, T. Ruotsalo. Brain-Computer Interface for Generating Personally Attractive Images. IEEE Trans. Affect. Comput. 14(1), 2023. https://doi.org/10.1109/TAFFC.2021.3059043

11. T. Ruotsalo, K. Mäkelä, M. M. Spapé, L. A. Leiva. Affective Relevance: Inferring Emotional Responses via fNIRS Neuroimaging. Proceedings of the 46th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR), 2023. https://doi.org/10.1145/3539618.3591946

12. C. de la Torre-Ortiz, M. Spapé, T. Ruotsalo. The P3 indexes the distance between perceived and target image. Psychophysiol. 60(5), 2023. https://doi.org/10.1111/psyp.14225

13. Y. Moreno-Alcayde, T. Ruotsalo, L. A. Leiva, V. J. Traver. Affective annotation of videos from EEG-based crowdsourcing. Pattern Analysis and Applications, 2025. In Press.