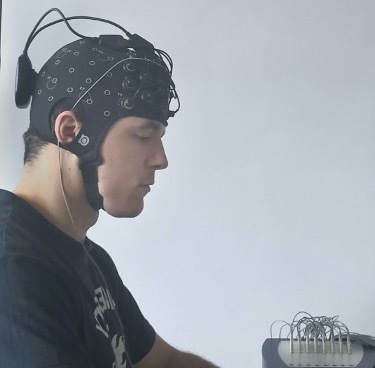

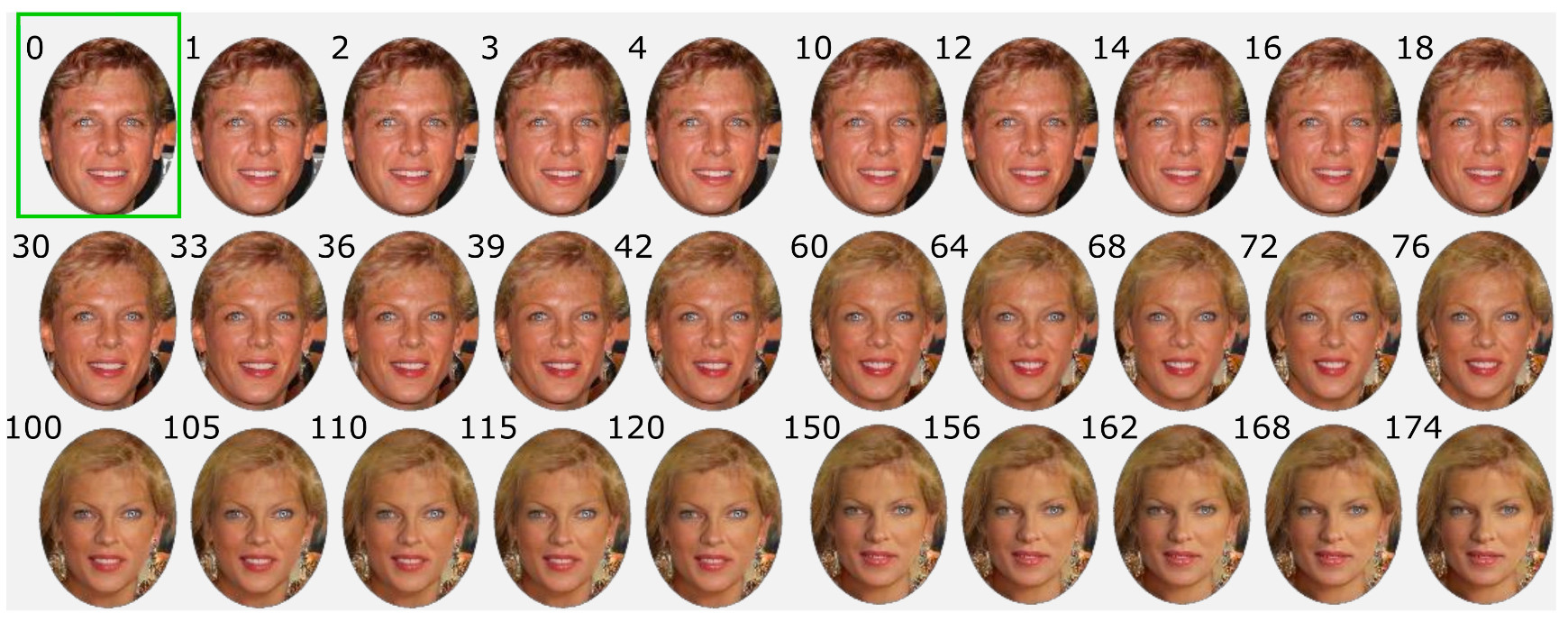

Visual recognition requires inferring the similarity between a perceived object and a mental target. However, similarity is difficult to measure for complex stimuli such as faces. Indeed, people may notice that someone “looks like” a familiar face, yet find it hard to describe the features on which such a comparison is based. Previous work shows that the number of similar visual elements between a face pictogram and a memorized target correlates with the P300 amplitude in the visual evoked potential. Here, we redefine similarity as the distance inferred from a latent space learned using a state-of-the-art generative adversarial neural network (GAN). A rapid serial visual presentation experiment was conducted with oddball images generated at varying distances from the target to determine how P300 amplitude related to GAN-derived distances.
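As a rough illustration of generating oddballs "at varying distances from the target", the sketch below samples latent vectors at fixed distances from a target latent vector. It is not the authors' procedure: the Euclidean metric, the 512-dimensional latent space, the distance values, and the names `sample_at_distance` and `euclidean_distance` are all assumptions made for the example, and the GAN generator that would turn each vector into an image is omitted.

```python
import math
import random


def euclidean_distance(a, b):
    """Euclidean distance between two equal-length latent vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def sample_at_distance(target, distance, rng):
    """Return a latent vector exactly `distance` away from `target`.

    A random direction is drawn from an isotropic Gaussian, normalized
    to unit length, scaled by `distance`, and added to the target.
    """
    direction = [rng.gauss(0.0, 1.0) for _ in target]
    norm = math.sqrt(sum(d * d for d in direction))
    return [t + distance * d / norm for t, d in zip(target, direction)]


rng = random.Random(0)

# Assumption: a 512-dimensional latent space; the source does not state the size.
latent_dim = 512
target = [rng.gauss(0.0, 1.0) for _ in range(latent_dim)]

# Assumption: illustrative distance levels, not the values used in the study.
distances = [0.0, 1.0, 2.0, 4.0]
oddballs = {d: sample_at_distance(target, d, rng) for d in distances}

for d, z in oddballs.items():
    # Each sampled vector lies at the requested distance from the target.
    print(d, round(euclidean_distance(target, z), 6))
```

In a setup like this, each sampled vector would be passed through the generator to produce a stimulus, and the requested distance would serve as the predictor against which P300 amplitude is compared.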

The results showed that distance-to-target was monotonically related to the P300, indicating that perceptual identification was associated with smooth, drifting image similarity. Furthermore, regression modeling indicated that while the P3a and P3b sub-components had distinct responses in location, time, and amplitude, they were similarly related to target distance. The work demonstrates that the P300 indexes the distance between the perceived and target images in smooth, natural, and complex visual stimuli, and shows that GANs present a novel modeling methodology for studying the relationships between stimuli, perception, and recognition.

REFERENCE

de la Torre‐Ortiz, C.; Spapé, M.; Ruotsalo, T. (2023). The P3 indexes the distance between perceived and target image. Psychophysiology, e14225. https://doi.org/10.1111/psyp.14225