We aim for a scientific breakthrough by proposing a first-of-its-kind approach to affective visual attention annotation via crowdsourced BCI signals

The project offers an ambitious perspective that integrates BCI technology and introduces a novel affective component

Attention estimation and annotation are tasks aimed at revealing which parts of some content are likely to draw users’ interest. Previous approaches have tackled these challenging tasks using a variety of behavioral signals, from dwell time to clickthrough data, together with computational models that relate visual content to these behavioral signals. However, such signals are only rough estimates of users’ real underlying attention and affective preferences. Indeed, users may attend to some content simply because it is salient or outrageous, not because it is genuinely interesting.

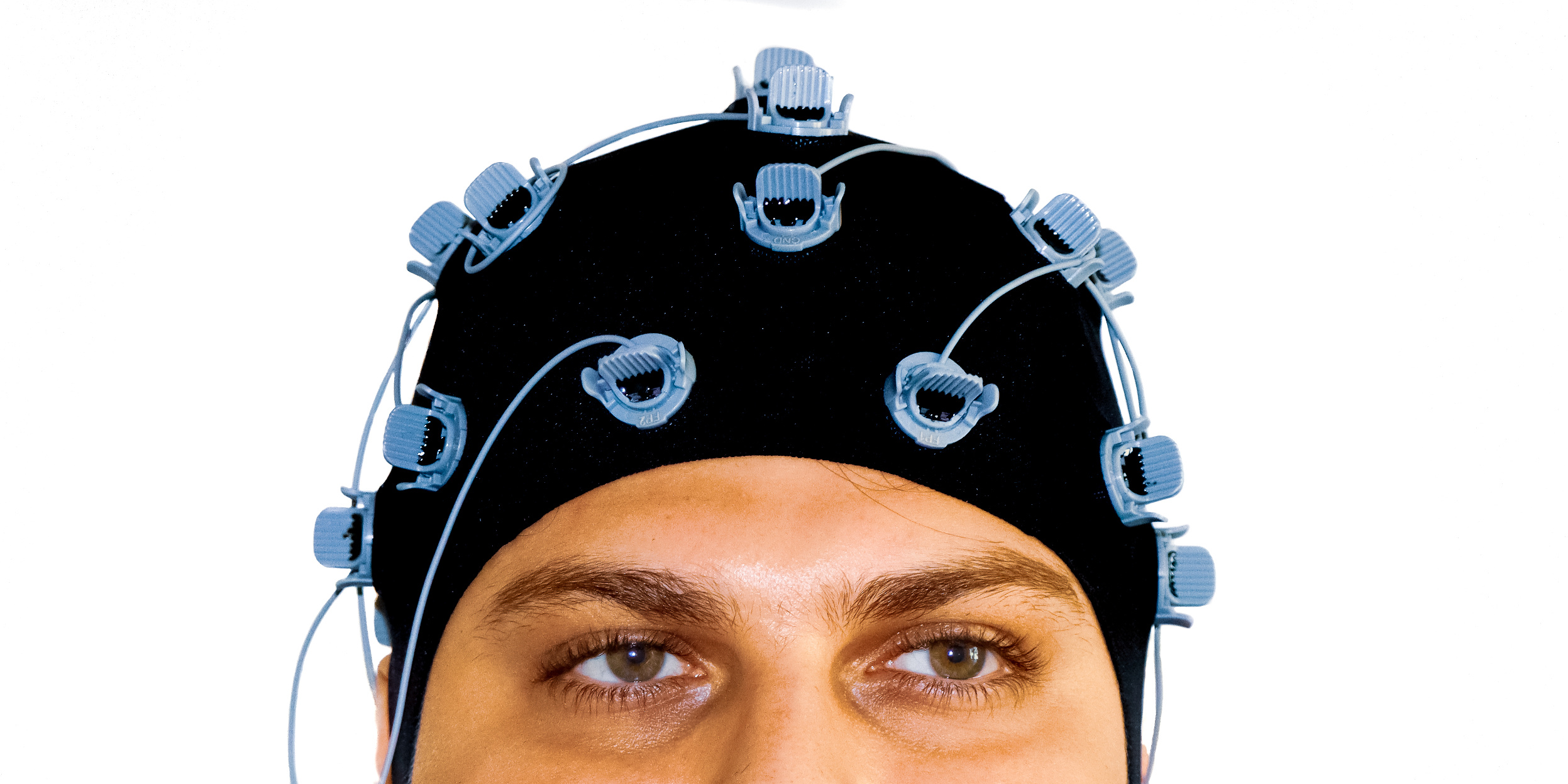

Project BANANA will use brain-computer interfaces (BCIs) to infer users’ preferences and their attentional correlates towards visual content, as measured directly from the human brain.